Torch-optimizer is a collection of optimizers for PyTorch, compatible with the `optim` module. Please cite the original authors of the optimization algorithms, or use GitHub's "Cite this repository" button.

Visualizations help us see how different algorithms deal with simple situations such as saddle points, local minima and valleys, and may provide interesting insights into the inner workings of an algorithm. The Rosenbrock and Rastrigin benchmark functions were selected for the following reasons.

Rosenbrock (also known as the banana function) is a non-convex function whose global minimum (at (1.0, 1.0) in its standard form) lies inside a long, narrow, relatively flat valley. Finding the valley is easy; converging to the global minimum, however, is difficult. Optimization algorithms might pay a lot of attention to one coordinate and have problems following the flat valley.

Rastrigin is a non-convex function with one global minimum at (0.0, 0.0). Finding the minimum of this function is a fairly difficult problem due to its large search space and its large number of local minima.

Each optimizer performs 501 optimization steps. The learning rate is the best one found by a hyperparameter search algorithm; the rest of the tuning parameters are left at their defaults. It is very easy to extend the script (`python examples/viz_optimizers.py`) and tune other optimizer parameters.

Warning: do not pick an optimizer based on visualizations. Optimization approaches have unique properties and may be tailored for different purposes, or may require an explicit learning rate schedule, etc. The best way to find out is to try an optimizer on your particular problem and see if it improves scores. If you do not know which optimizer to use, start with the built-in SGD/Adam; once the training logic is ready and baseline scores are established, swap in a different optimizer and compare.

Usage follows the `torch.optim` pattern. For example, AdaBelief is created with `import torch_optimizer as optim`, then `optimizer = optim.AdaBelief(model.parameters(), lr=1e-3, betas=(0.9, 0.999), eps=1e-3, weight_decay=0, amsgrad=False, weight_decouple=False, fixed_decay=False, rectify=False)`, followed by `optimizer.step()`. It implements the paper AdaBelief Optimizer, adapting stepsizes by the belief in observed gradients (2020). Other optimizers in the collection are based on, for example, On the insufficiency of existing momentum schemes for Stochastic Optimization (2019) (AccSGD) and Optimal Adaptive and Accelerated Stochastic Gradient Descent (2018) (A2Grad).
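To make the two benchmark descriptions concrete, here is a minimal pure-Python sketch. It assumes the standard textbook forms of the functions (Rosenbrock with a=1, b=100; Rastrigin with A=10), which may not match the exact definitions in `examples/viz_optimizers.py`, and it uses plain gradient descent rather than any optimizer from the library. All helper names are hypothetical.

```python
import math


# Standard Rosenbrock ("banana") function: global minimum 0 at (a, a**2) = (1, 1).
def rosenbrock(x: float, y: float, a: float = 1.0, b: float = 100.0) -> float:
    return (a - x) ** 2 + b * (y - x ** 2) ** 2


def rosenbrock_grad(x: float, y: float, a: float = 1.0, b: float = 100.0):
    # Analytic partial derivatives of the Rosenbrock function.
    dx = -2.0 * (a - x) - 4.0 * b * x * (y - x ** 2)
    dy = 2.0 * b * (y - x ** 2)
    return dx, dy


# Standard 2-D Rastrigin function: global minimum 0 at (0, 0),
# surrounded by a regular grid of local minima near integer coordinates.
def rastrigin(x: float, y: float, A: float = 10.0) -> float:
    return (2 * A
            + (x ** 2 - A * math.cos(2 * math.pi * x))
            + (y ** 2 - A * math.cos(2 * math.pi * y)))


def rastrigin_grad(x: float, y: float, A: float = 10.0):
    # Analytic partial derivatives of the Rastrigin function.
    dx = 2 * x + 2 * math.pi * A * math.sin(2 * math.pi * x)
    dy = 2 * y + 2 * math.pi * A * math.sin(2 * math.pi * y)
    return dx, dy


def gradient_descent(grad, start, lr: float, steps: int = 501):
    """Plain gradient descent for a fixed number of steps (501, as in the text)."""
    x, y = start
    for _ in range(steps):
        gx, gy = grad(x, y)
        x, y = x - lr * gx, y - lr * gy
    return x, y


if __name__ == "__main__":
    end = gradient_descent(rosenbrock_grad, (-1.5, 2.0), lr=5e-4)
    print("Rosenbrock:", end, rosenbrock(*end))
    end = gradient_descent(rastrigin_grad, (2.2, -1.1), lr=1e-3)
    print("Rastrigin: ", end, rastrigin(*end))
```

With a small, stable learning rate, gradient descent lowers the Rosenbrock value but is still far from (1.0, 1.0) after 501 steps because it crawls along the flat valley, while on Rastrigin it settles in the nearest local minimum (near (2, -1) from this start) instead of (0.0, 0.0).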

#Ram optimizer install#

Installation is simple: `$ pip install torch_optimizer`. A simple example then starts with `import torch_optimizer as optim`, where `model` is your own PyTorch model (`# model = ...`).
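The "baseline first, then swap" workflow can be sketched as a training helper that takes the optimizer class as a parameter. This is a minimal sketch with a hypothetical `train` helper and toy linear-regression data (y = 3x + 1 plus noise); it uses only the built-in `torch.optim.SGD` and `torch.optim.Adam`, so it runs without torch-optimizer installed.

```python
from typing import Tuple

import torch
from torch import nn


def train(optimizer_cls, steps: int = 200, **optimizer_kwargs) -> Tuple[float, float]:
    """Fit a tiny linear model with the given optimizer class; return (first, last) loss."""
    torch.manual_seed(0)
    # Hypothetical toy data: y = 3x + 1 with a little Gaussian noise.
    x = torch.linspace(-1, 1, 64).unsqueeze(1)
    y = 3 * x + 1 + 0.05 * torch.randn_like(x)

    model = nn.Linear(1, 1)
    # Any optimizer following the torch.optim constructor convention fits here.
    optimizer = optimizer_cls(model.parameters(), **optimizer_kwargs)
    loss_fn = nn.MSELoss()

    losses = []
    for _ in range(steps):
        optimizer.zero_grad()          # clear gradients from the previous step
        loss = loss_fn(model(x), y)
        loss.backward()                # compute gradients
        optimizer.step()               # update parameters
        losses.append(loss.item())
    return losses[0], losses[-1]


if __name__ == "__main__":
    # Establish baselines with built-in optimizers first.
    print("SGD ", train(torch.optim.SGD, lr=0.1))
    print("Adam", train(torch.optim.Adam, lr=5e-2))
```

Assuming torch-optimizer is installed and follows the same constructor convention as `torch.optim` (as the AdaBelief example suggests), trying one of its optimizers should only change the first argument, e.g. `train(torch_optimizer.AdaBelief, lr=1e-3)` after `import torch_optimizer`.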

#Ram optimizer free#

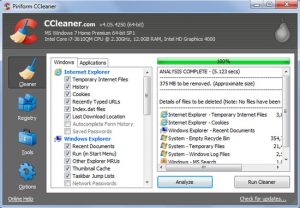

We went back to browsing (without opening any more tabs), collected our emails with Explorer, and a few minutes later RAM use was up to 2.9GB. This won't necessarily be the case for everyone; it all depends on your applications and how they're being used. And of course, if your system is short of RAM, even very small savings might help. Memory optimizers aren't nearly as useful as they pretend, then, but if you're interested in trying one out for yourself, Reduce Memory is the simplest possible way to get started. If it does help and you need something more configurable, take a look at Mem Reduct. Reduce Memory is a free application for Windows XP and later.

#Ram optimizer Pc#

The freed-up 0.8GB of RAM wasn't empty: it would have held all kinds of files and data, and if our apps needed that information again they'd probably have to reclaim the RAM and reload it from disk. We left Task Manager running, came back to the test PC a couple of minutes later, and the RAM "in use" figure had risen to 2.6GB.

